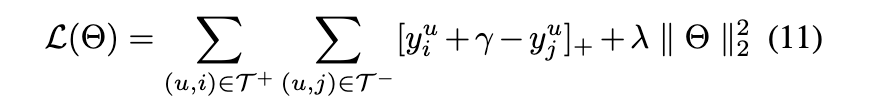

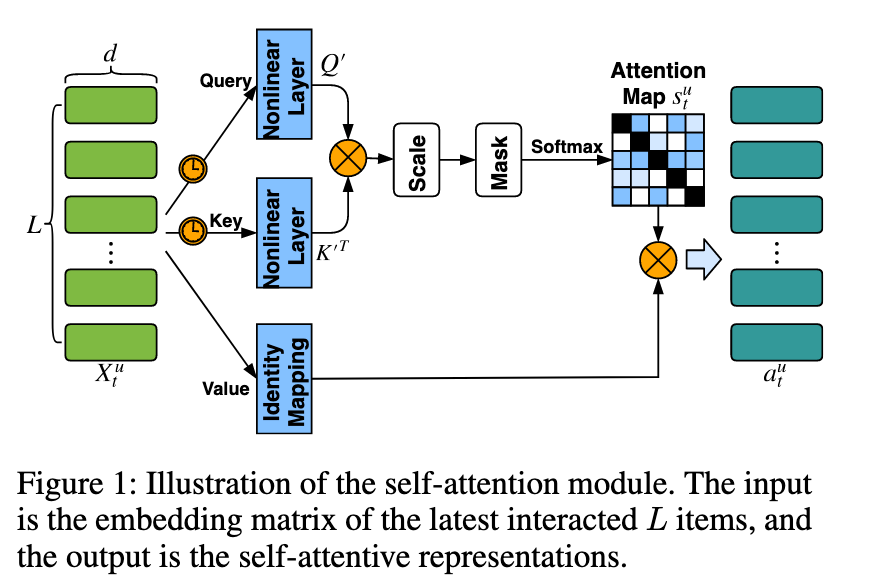

This paper applies a self-attentive model to next-item recommendation, as shown in Figure 1. In this paper, query = key = value = the item embeddings.

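Self-attention transforms the sequence's original representation into a new representation of the same size. An aggregation operation (e.g., sum, max, or min) can then reduce this matrix to a single vector, and the next item's embedding is trained to be as close as possible to that vector. In the process the model learns a weight matrix over the items (the attention map).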
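A minimal NumPy sketch of this step follows. It assumes the variant where query and key are ReLU projections of the item embeddings and the value is the raw embedding matrix. The weight names `W_q`, `W_k` and the helper functions are hypothetical. Masking is omitted, and mean is the default aggregation alongside the sum/max/min options mentioned above.

```python
import numpy as np

def softmax(z, axis=-1):
    # Subtract the row max for numerical stability before exponentiating.
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k):
    """X: (L, d) embeddings of the user's L most recent items.
    Assumption: query/key are ReLU projections of X; the value is X itself."""
    d = X.shape[1]
    Q = np.maximum(X @ W_q, 0.0)          # ReLU(X W_q), shape (L, d)
    K = np.maximum(X @ W_k, 0.0)          # ReLU(X W_k), shape (L, d)
    A = softmax(Q @ K.T / np.sqrt(d))     # attention map (L, L); each row sums to 1
    return A @ X, A                       # new representation keeps shape (L, d)

def aggregate(H, how="mean"):
    # Collapse the (L, d) matrix into one d-dim vector.
    ops = {"mean": np.mean, "sum": np.sum, "max": np.max, "min": np.min}
    return ops[how](H, axis=0)

rng = np.random.default_rng(0)
L, d = 5, 8
X = rng.normal(size=(L, d))
W_q = rng.normal(size=(d, d))
W_k = rng.normal(size=(d, d))
H, A = self_attention(X, W_q, W_k)
m = aggregate(H)                          # short-term intent vector
next_item = rng.normal(size=d)            # hypothetical next-item embedding
short_term_dist = np.sum((m - next_item) ** 2)  # training pushes this toward 0
```

The attention map `A` is the learned weighting: row `i` says how much each recent item contributes to the new representation of item `i`.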

The self-attention part above mainly captures short-term information.

Long-term information is handled by metric learning: if user u has interacted with item v, their embeddings U and V are pulled as close together as possible.
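The long-term part can be illustrated with a squared Euclidean distance and one gradient step that pulls an interacting user-item pair together. This is a sketch, not the paper's training loop. The table names `U` and `V`, the learning rate, and both helper functions are hypothetical.

```python
import numpy as np

def long_term_distance(U, V, u, v):
    # Squared Euclidean distance between user u and item v in the shared space.
    diff = U[u] - V[v]
    return float(diff @ diff)

def pull_together(U, V, u, v, lr=0.1):
    # One gradient-descent step on ||U[u] - V[v]||^2 for an observed (u, v) pair:
    # the gradient w.r.t. U[u] is 2(U[u] - V[v]); w.r.t. V[v] it is the negative.
    grad = 2.0 * (U[u] - V[v])
    U[u] -= lr * grad
    V[v] += lr * grad

rng = np.random.default_rng(1)
U = rng.normal(size=(3, 4))   # 3 users, 4-dim embeddings
V = rng.normal(size=(6, 4))   # 6 items in the same metric space
before = long_term_distance(U, V, 0, 2)
pull_together(U, V, 0, 2)
after = long_term_distance(U, V, 0, 2)  # 0.36 * before with lr=0.1
```

Pulling alone would eventually collapse all embeddings onto one point. Metric-learning objectives therefore also push non-interacted items away.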

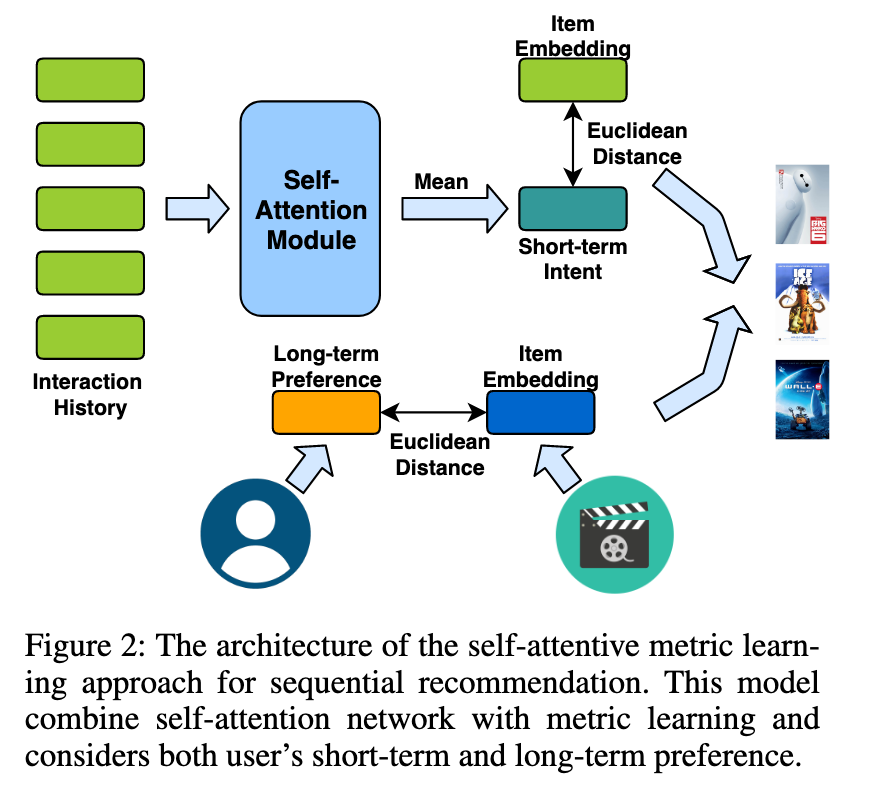

Finally, the two parts are combined by linear weighting, where each part is measured as a distance between embeddings.
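Here is a sketch of the linear weighting. It assumes that both parts use squared Euclidean distance and that long- and short-term item embeddings live in separate tables (`V` and `X`). The mixing weight `omega` is hypothetical. Candidates are ranked by ascending combined distance.

```python
import numpy as np

def combined_distance(u_vec, m_vec, V, X, omega=0.3):
    """Score every candidate item; smaller means a better next-item candidate.
    u_vec: long-term user embedding, shape (d,)
    m_vec: short-term intent vector from self-attention, shape (d,)
    V, X:  long- and short-term item tables, each (n_items, d) (assumed separate)
    omega: hypothetical mixing weight in [0, 1]."""
    d_long = np.sum((V - u_vec) ** 2, axis=1)   # ||U_u - V_j||^2 for every item j
    d_short = np.sum((X - m_vec) ** 2, axis=1)  # ||m_u - X_j||^2 for every item j
    return omega * d_long + (1.0 - omega) * d_short

def rank_items(scores):
    # Ascending order: the closest item is recommended first.
    return np.argsort(scores)

u_vec = np.array([0.0, 0.0])
m_vec = np.array([0.0, 0.0])
V = np.array([[1.0, 0.0], [3.0, 0.0]])
X = np.array([[0.0, 2.0], [0.0, 0.0]])
scores = combined_distance(u_vec, m_vec, V, X, omega=0.3)  # [3.1, 2.7]
order = rank_items(scores)                                  # [1, 0]
```

In the toy data, item 0 is closer in the long-term space, but item 1 matches the short-term intent exactly. With `omega=0.3` the short-term term dominates, so item 1 ranks first.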

Objective function: it combines the two ideas above (short-term self-attention and long-term metric learning) through linear weighting.
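These notes do not spell out the objective's exact form. A common choice in metric-learning recommenders is a pairwise hinge loss. This loss requires each observed next item to be closer than a sampled negative item by some margin. The sketch below assumes that form, uses a hypothetical margin value, and omits regularization.

```python
import numpy as np

def hinge_loss(d_pos, d_neg, margin=0.5):
    """d_pos: combined distances to items the user actually interacted with next.
    d_neg: combined distances to sampled negative items (same shape).
    A pair costs nothing once its negative is at least `margin` farther away."""
    d_pos = np.asarray(d_pos, dtype=float)
    d_neg = np.asarray(d_neg, dtype=float)
    return float(np.sum(np.maximum(0.0, d_pos + margin - d_neg)))

# First pair already satisfies the margin (1.0 + 0.5 < 3.0) and contributes 0;
# second pair violates it by 2.0 + 0.5 - 2.2 = 0.3.
loss = hinge_loss([1.0, 2.0], [3.0, 2.2], margin=0.5)
```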


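In addition, the paper's way of incorporating the time signal is simple but worth borrowing. It adds a time-dependent matrix to the embedding matrix. Each entry depends on the time step and the element's position and is generated by a fixed function, e.g. $TE(t, 2i) = \sin\left(t / 10000^{2i/d}\right)$.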
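Below is a sketch of the time-encoding matrix. It uses the sinusoidal form above for even columns. For odd columns it assumes the matching cosine $\cos\left(t / 10000^{2i/d}\right)$, as in the Transformer positional encoding. The function name `time_encoding` is hypothetical.

```python
import numpy as np

def time_encoding(T, d):
    """Return a (T, d) matrix whose row t encodes time step t.
    Even column 2i: sin(t / 10000^(2i/d)); odd column 2i+1: cos of the same angle (assumed)."""
    t = np.arange(T)[:, None]                 # time steps, shape (T, 1)
    two_i = np.arange(0, d, 2)[None, :]       # even column indices 0, 2, 4, ...
    angle = t / np.power(10000.0, two_i / d)  # shape (T, ceil(d/2))
    TE = np.zeros((T, d))
    TE[:, 0::2] = np.sin(angle)
    TE[:, 1::2] = np.cos(angle[:, : d // 2])  # d // 2 odd columns (handles odd d)
    return TE

L, d = 4, 6
X = np.ones((L, d))                 # placeholder item-embedding matrix
X_with_time = X + time_encoding(L, d)
```

The matrix is simply added to the (L, d) embedding matrix. It needs no trainable parameters, which is part of why the approach is cheap to reuse.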

| Field | Content |
| --- | --- |
| Title | Next Item Recommendation with Self-Attentive Metric Learning |
| Author | Shuai Zhang |
| Year | 2019 |
| Keywords | Next item; metric learning; long- and short-term; time signal |
| Abstract | In this paper, we propose a novel sequence-aware recommendation model. Our model utilizes self-attention mechanism to infer the item-item relationship from user’s historical interactions. With self-attention, it is able to estimate the relative weights of each item in user interaction trajectories to learn better representations for user’s transient interests. The model is finally trained in a metric learning framework, taking both local and global user intentions into consideration. Experiments on a wide range of datasets on different domains demonstrate that our approach outperforms the state-of-the-art by a wide margin. |